认识

一、认识

AST 解析器 的开发主要分为两个部分来进行: 词法分析器和语法分析器。

二、词法分析器工作流

词法分析器, 也叫分词器(Tokenizer),它的作用是将代码划分为一个个词法单元,便于进行后续的语法分析。比如下面的这段代码:

let foo = function() {}

在经过分词之后,代码会被切分为如下的 token 数组:

['let', 'foo', '=', 'function', '(', ')', '{', '}']

从中你可以看到,原本一行普通的代码字符串被拆分成了拥有语法属性的 token 列表,不同的 token 之间也存在千丝万缕的联系,而后面所要介绍的语法分析器,就是来梳理各个 token 之间的联系,整理出 AST 数据结构。

当下我们所要实现的词法分析器,本质上是对代码字符串进行逐个字符的扫描,然后根据一定的语法规则进行分组。其中,涉及到几个关键的步骤:

-

确定语法规则,包括语言内置的关键词、单字符、分隔符等

-

逐个代码字符扫描,根据语法规则进行

token分组

2.1 确定语法规则

新建 src/tokenizer.ts,首先声明一些必要的类型:

export enum TokenType {

Let = "Let",

Const = "Const",

Var = "Var",

Assign = "Assign",

Function = "Function",

Number = "Number",

Operator = "Operator",

Identifier = "Identifier",

LeftParen = "LeftParen",

RightParen = "RightParen",

LeftCurly = "LeftCurly",

RightCurly = "RightCurly",

Comma = "Comma",

Dot = "Dot",

Semicolon = "Semicolon",

StringLiteral = "StringLiteral",

Return = "Return",

Import = "Import",

Export = "Export",

Default = "Default",

From = "From",

As = "As",

Asterisk = "Asterisk",

}

export type Token = {

type: TokenType;

value?: string;

start: number;

end: number;

raw?: string;

};

然后定义 Token 的生成器对象:

// 策略模式

const TOKENS_GENERATOR: Record<string, (...args: any[]) => Token> = {

let(start: number) {

return { type: TokenType.Let, value: "let", start, end: start + 3 };

},

const(start: number) {

return { type: TokenType.Const, value: "const", start, end: start + 5 };

},

var(start: number) {

return { type: TokenType.Var, value: "var", start, end: start + 3 };

},

assign(start: number) {

return { type: TokenType.Assign, value: "=", start, end: start + 1 };

},

import(start: number) {

return {

type: TokenType.Import,

value: "import",

start,

end: start + 6,

};

},

export(start: number) {

return {

type: TokenType.Export,

value: "export",

start,

end: start + 6,

};

},

from(start: number) {

return {

type: TokenType.From,

value: "from",

start,

end: start + 4,

};

},

as(start: number) {

return {

type: TokenType.As,

value: "as",

start,

end: start + 2,

};

},

asterisk(start: number) {

return {

type: TokenType.Asterisk,

value: "*",

start,

end: start + 1,

};

},

default(start: number) {

return {

type: TokenType.Default,

value: "default",

start,

end: start + 7,

};

},

number(start: number, value: string) {

return {

type: TokenType.Number,

value,

start,

end: start + value.length,

raw: value,

};

},

function(start: number) {

return {

type: TokenType.Function,

value: "function",

start,

end: start + 8,

};

},

return(start: number) {

return {

type: TokenType.Return,

value: "return",

start,

end: start + 6,

};

},

operator(start: number, value: string) {

return {

type: TokenType.Operator,

value,

start,

end: start + value.length,

};

},

comma(start: number) {

return {

type: TokenType.Comma,

value: ",",

start,

end: start + 1,

};

},

leftParen(start: number) {

return { type: TokenType.LeftParen, value: "(", start, end: start + 1 };

},

rightParen(start: number) {

return { type: TokenType.RightParen, value: ")", start, end: start + 1 };

},

leftCurly(start: number) {

return { type: TokenType.LeftCurly, value: "{", start, end: start + 1 };

},

rightCurly(start: number) {

return { type: TokenType.RightCurly, value: "}", start, end: start + 1 };

},

dot(start: number) {

return { type: TokenType.Dot, value: ".", start, end: start + 1 };

},

semicolon(start: number) {

return { type: TokenType.Semicolon, value: ";", start, end: start + 1 };

},

stringLiteral(start: number, value: string, raw: string) {

return {

type: TokenType.StringLiteral,

value,

start,

end: start + value.length + 2,

raw,

};

},

identifier(start: number, value: string) {

return {

type: TokenType.Identifier,

value,

start,

end: start + value.length,

};

},

};

type SingleCharTokens = "(" | ")" | "{" | "}" | "." | ";" | "," | "*" | "=";

// 单字符到 Token 生成器的映射

const KNOWN_SINGLE_CHAR_TOKENS = new Map<

SingleCharTokens,

typeof TOKENS_GENERATOR[keyof typeof TOKENS_GENERATOR]

>([

["(", TOKENS_GENERATOR.leftParen],

[")", TOKENS_GENERATOR.rightParen],

["{", TOKENS_GENERATOR.leftCurly],

["}", TOKENS_GENERATOR.rightCurly],

[".", TOKENS_GENERATOR.dot],

[";", TOKENS_GENERATOR.semicolon],

[",", TOKENS_GENERATOR.comma],

["*", TOKENS_GENERATOR.asterisk],

["=", TOKENS_GENERATOR.assign],

]);

2.2 代码字符扫描、分组

现在我们开始实现 Tokenizer 对象:

export class Tokenizer {

private _tokens: Token[] = [];

private _currentIndex: number = 0;

private _source: string;

constructor(input: string) {

this._source = input;

}

tokenize(): Token[] {

while (this._currentIndex < this._source.length) {

let currentChar = this._source[this._currentIndex];

const startIndex = this._currentIndex;

// 根据语法规则进行 token 分组

}

return this._tokens;

}

}

在扫描字符的过程,我们需要对不同的字符各自进行不同的处理,具体的策略如下:

-

当前字符为分隔符,如空格,直接跳过,不处理;

-

当前字符为字母,需要继续扫描,获取完整的单词:

-

如果单词为语法关键字,则新建相应关键字的

Token -

否则视为普通的变量名

-

-

当前字符为单字符,如

{、}、(、),则新建单字符对应的Token

三、语法分析器工作流

在解析出词法 token 之后,我们就可以进入语法分析阶段了。在这个阶段,我们会依次遍历 token,对代码进行语法结构层面的分析,最后的目标是生成 AST 数据结构。

3.1 AST 数据结构

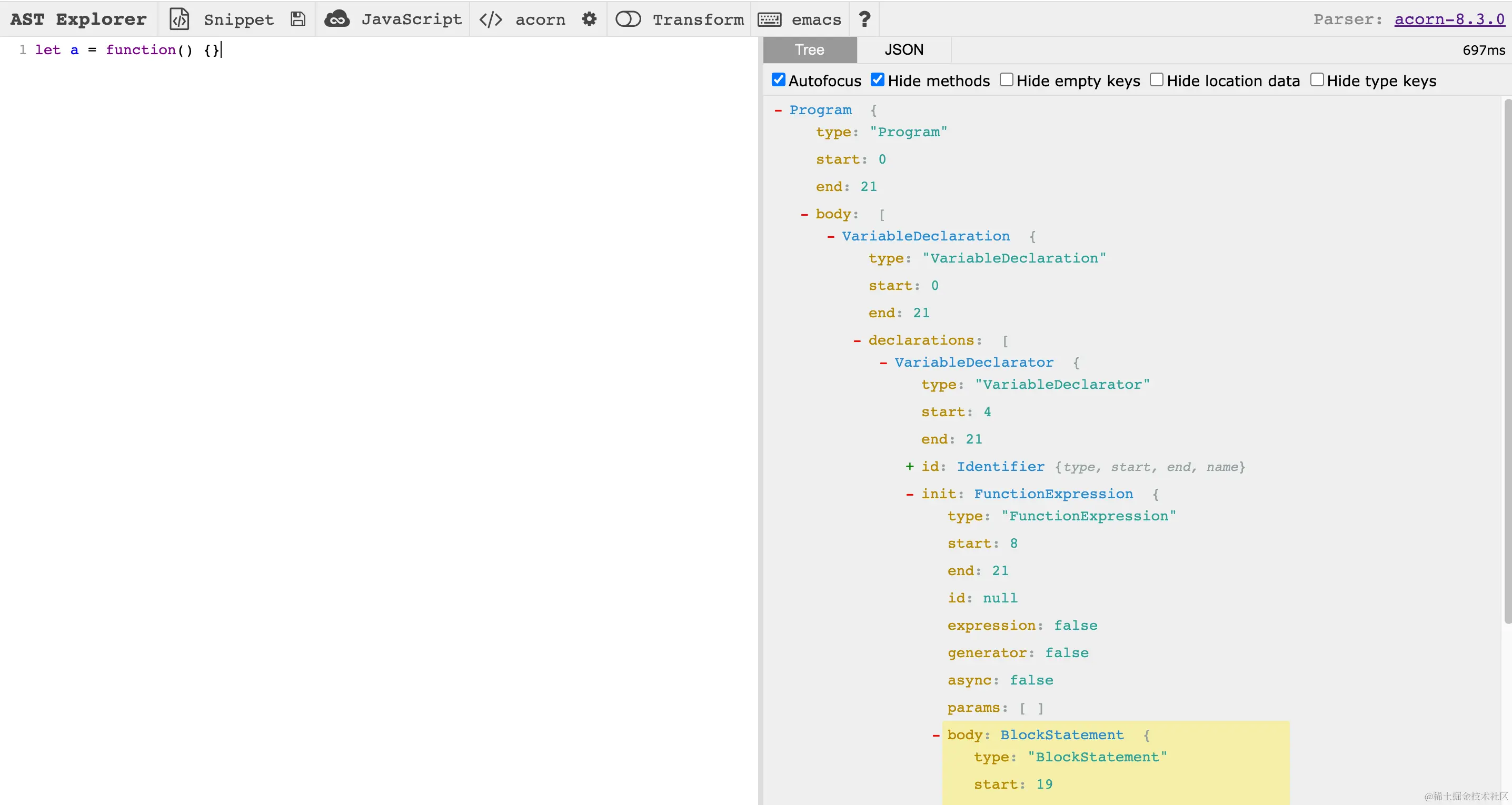

通过 AST Explorer 预览 let foo = function() {} 的 AST 数据结构。

接下来,我们要做的就是将 token 数组转换为上图所示的 AST 数据。

3.2 编写 AST 结构

首先新建src/node-types.ts, 添加如下的类型声明代码

export enum NodeType {

Program = "Program",

VariableDeclaration = "VariableDeclaration",

FunctionDeclaration = "FunctionDeclaration",

Identifier = "Identifier",

BlockStatement = "BlockStatement",

ExpressionStatement = "ExpressionStatement",

ReturnStatement = "ReturnStatement",

CallExpression = "CallExpression",

BinaryExpression = "BinaryExpression",

MemberExpression = "MemberExpression",

FunctionExpression = "FunctionExpression",

Literal = "Literal",

ImportDeclaration = "ImportDeclaration",

ImportSpecifier = "ImportSpecifier",

ImportDefaultSpecifier = "ImportDefaultSpecifier",

ImportNamespaceSpecifier = "ImportNamespaceSpecifier",

ExportDeclaration = "ExportDeclaration",

ExportSpecifier = "ExportSpecifier",

ExportDefaultDeclaration = "ExportDefaultDeclaration",

ExportNamedDeclaration = "ExportNamedDeclaration",

ExportAllDeclaration = "ExportAllDeclaration",

VariableDeclarator = "VariableDeclarator",

}

export enum FunctionType {

FunctionDeclaration,

CallExpression,

}

export interface Node {

type: string;

start: number;

end: number;

}

export interface Program extends Node {

type: NodeType.Program;

body: Statement[];

}

export interface Literal extends Node {

type: NodeType.Literal;

value: string;

raw: string;

}

export interface Identifier extends Node {

type: NodeType.Identifier;

name: string;

}

export interface CallExpression extends Node {

type: NodeType.CallExpression;

callee: Expression;

arguments: Expression[];

}

export interface MemberExpression extends Node {

type: NodeType.MemberExpression;

object: Identifier | MemberExpression;

property: Identifier;

computed: boolean;

}

export interface BlockStatement extends Node {

type: NodeType.BlockStatement;

body: Statement[];

}

export interface ExpressionStatement extends Node {

type: NodeType.ExpressionStatement;

expression: Expression;

}

export interface FunctionExpression extends FunctionNode {

type: NodeType.FunctionExpression;

}

export interface FunctionDeclaration extends FunctionNode {

type: NodeType.FunctionDeclaration;

id: Identifier | null;

}

export type VariableKind = "var" | "let" | "const";

export interface VariableDeclarator extends Node {

type: NodeType.VariableDeclarator;

id: Identifier;

init: Expression | Literal | null;

}

export interface VariableDeclaration extends Node {

type: NodeType.VariableDeclaration;

kind: "var" | "let" | "const";

declarations: VariableDeclarator[];

}

export interface ImportSpecifier extends Node {

type: NodeType.ImportSpecifier;

imported: Identifier;

local: Identifier;

}

export interface ImportDefaultSpecifier extends Node {

type: NodeType.ImportDefaultSpecifier;

local: Identifier;

}

export interface ImportNamespaceSpecifier extends Node {

type: NodeType.ImportNamespaceSpecifier;

local: Identifier;

}

export type ImportSpecifiers =

| (ImportSpecifier | ImportDefaultSpecifier)[]

| ImportNamespaceSpecifier[];

export interface ImportDeclaration extends Node {

type: NodeType.ImportDeclaration;

specifiers: ImportSpecifiers;

source: Literal;

}

export type Declaration =

| FunctionDeclaration

| VariableDeclaration

| ImportDeclaration

| ExportDeclaration

| VariableDeclarator;

export interface ExportSpecifier extends Node {

type: NodeType.ExportSpecifier;

exported: Identifier;

local: Identifier;

}

export interface ExportNamedDeclaration extends Node {

type: NodeType.ExportNamedDeclaration;

declaration: Declaration | null;

specifiers: ExportSpecifier[];

source: Literal | null;

}

export interface ExportDefaultDeclaration extends Node {

type: NodeType.ExportDefaultDeclaration;

declaration: Declaration | Expression;

}

export interface ExportAllDeclaration extends Node {

type: NodeType.ExportAllDeclaration;

source: Literal;

exported: Identifier | null;

}

export type ExportDeclaration =

| ExportNamedDeclaration

| ExportDefaultDeclaration

| ExportAllDeclaration;

export interface BinaryExpression extends Node {

type: NodeType.BinaryExpression;

left: Expression;

right: Expression;

operator: string;

}

export interface FunctionNode extends Node {

id: Identifier | null;

params: Expression[] | Identifier[];

body: BlockStatement;

}

export interface ReturnStatement extends Node {

type: NodeType.ReturnStatement;

argument: Expression;

}

export type Statement =

| ImportDeclaration

| ExportDeclaration

| VariableDeclaration

| FunctionDeclaration

| ExpressionStatement

| BlockStatement

| ReturnStatement;

export type Expression =

| CallExpression

| MemberExpression

| Identifier

| Literal

| BinaryExpression

| FunctionExpression;

3.3 实现 Parser 结构

新建src/parser.ts,添加 Parser 类的初始化代码:

export class Parser {

private _tokens: Token[] = [];

private _currentIndex = 0;

constructor(token: Token[]) {

this._tokens = [...token];

}

parse(): Program {

const program = this._parseProgram();

return program;

}

private _parseProgram(): Program {

const program: Program = {

type: NodeType.Program,

body: [],

start: 0,

end: Infinity,

};

// 解析 token 数组

return program;

}

}

从中你可以看出,解析 AST 的核心逻辑就集中在 _parseProgram 方法中,接下来让我们一步步完善一个方法:

export class Parser {

private _parseProgram {

// 省略已有代码

while (!this._isEnd()) {

const node = this._parseStatement();

program.body.push(node);

if (this._isEnd()) {

program.end = node.end;

}

}

return program;

}

// token 是否已经扫描完

private _isEnd(): boolean {

return this._currentIndex >= this._tokens.length;

}

// 工具方法,表示消费当前 Token,扫描位置移动到下一个 token

private _goNext(type: TokenType | TokenType[]): Token {

const currentToken = this._tokens[this._currentIndex];

// 断言当前 Token 的类型,如果不能匹配,则抛出错误

if (Array.isArray(type)) {

if (!type.includes(currentToken.type)) {

throw new Error(

`Expect ${type.join(",")}, but got ${currentToken.type}`

);

}

} else {

if (currentToken.type !== type) {

throw new Error(`Expect ${type}, but got ${currentToken.type}`);

}

}

this._currentIndex++;

return currentToken;

}

private _checkCurrentTokenType(type: TokenType | TokenType[]): boolean {

if (this._isEnd()) {

return false;

}

const currentToken = this._tokens[this._currentIndex];

if (Array.isArray(type)) {

return type.includes(currentToken.type);

} else {

return currentToken.type === type;

}

}

private _getCurrentToken(): Token {

return this._tokens[this._currentIndex];

}

private _getPreviousToken(): Token {

return this._tokens[this._currentIndex - 1];

}

}

一个程序(Program)实际上由各个语句(Statement)来构成,因此在_parseProgram逻辑中,我们主要做的就是扫描一个个语句,然后放到 Program 对象的 body 中。那么,接下来,我们将关注点放到语句的扫描逻辑上面。

从之前的示例代码:

let a = function() {}

我们可以知道这是一个变量声明语句,那么现在我们就在 _parseStatement 中实现这类语句的解析:

export enum NodeType {

Program = "Program",

VariableDeclarator = "VariableDeclarator",

}

export class Parser {

private _parseStatement(): Statement {

// TokenType 来自 Tokenizer 的实现中

if (this._checkCurrentTokenType(TokenType.Let)) {

return this._parseVariableDeclaration();

}

throw new Error("Unexpected token");

}

private _parseVariableDeclaration(): VariableDeclaration {

// 获取语句开始位置

const { start } = this._getCurrentToken();

// 拿到 let

const kind = this._getCurrentToken().value;

this._goNext(TokenType.Let);

// 解析变量名 foo

const id = this._parseIdentifier();

// 解析 =

this._goNext(TokenType.Assign);

// 解析函数表达式

const init = this._parseFunctionExpression();

const declarator: VariableDeclarator = {

type: NodeType.VariableDeclarator,

id,

init,

start: id.start,

end: init ? init.end : id.end,

};

// 构造 Declaration 节点

const node: VariableDeclaration = {

type: NodeType.VariableDeclaration,

kind: kind as VariableKind,

declarations: [declarator],

start,

end: this._getPreviousToken().end,

};

return node;

}

}

接下来主要的代码解析逻辑可以梳理如下:

-

发现

let关键词对应的token,进入_parseVariableDeclaration -

解析变量名,如示例代码中的

foo -

解析函数表达式,如示例代码中的

function() {}

其中,解析变量名的过程我们通过_parseIdentifier 方法实现,解析函数表达式的过程由_parseFunctionExpression来实现,代码如下:

// 1. 解析变量名

private _parseIdentifier(): Identifier {

const token = this._getCurrentToken();

const identifier: Identifier = {

type: NodeType.Identifier,

name: token.value!,

start: token.start,

end: token.end,

};

this._goNext(TokenType.Identifier);

return identifier;

}

// 2. 解析函数表达式

private _parseFunctionExpression(): FunctionExpression {

const { start } = this._getCurrentToken();

this._goNext(TokenType.Function);

let id = null;

if (this._checkCurrentTokenType(TokenType.Identifier)) {

id = this._parseIdentifier();

}

const node: FunctionExpression = {

type: NodeType.FunctionExpression,

id,

params: [],

body: {

type: NodeType.BlockStatement,

body: [],

start: start,

end: Infinity,

},

start,

end: 0,

};

return node;

}

// 用于解析函数参数

private _parseParams(): Identifier[] | Expression[] {

// 消费 "("

this._goNext(TokenType.LeftParen);

const params = [];

// 逐个解析括号中的参数

while (!this._checkCurrentTokenType(TokenType.RightParen)) {

let param = this._parseIdentifier();

params.push(param);

}

// 消费 ")"

this._goNext(TokenType.RightParen);

return params;

}

// 用于解析函数体

private _parseBlockStatement(): BlockStatement {

const { start } = this._getCurrentToken();

const blockStatement: BlockStatement = {

type: NodeType.BlockStatement,

body: [],

start,

end: Infinity,

};

// 消费 "{"

this._goNext(TokenType.LeftCurly);

while (!this._checkCurrentTokenType(TokenType.RightCurly)) {

// 递归调用 _parseStatement 解析函数体中的语句(Statement)

const node = this._parseStatement();

blockStatement.body.push(node);

}

blockStatement.end = this._getCurrentToken().end;

// 消费 "}"

this._goNext(TokenType.RightCurly);

return blockStatement;

}